Explores how AI mimics human thinking through the lens of information theory and Shannon's communication model, with emphasis on emotional decision-making and the limits of AI in intimate relationships. Critiques surveillance capitalism, in which user data becomes the product on AI-driven platforms. Warns against over-reliance on AI predictions in the presence of noise and uncertainty.

Key Points

- 1.Human thinking fuses logic, morals, imagination; AI uses statistical pattern prediction via embeddings and neural nets

- 2.Shannon model: AI processes information by reducing uncertainty but struggles with noise and cannot guarantee authenticity

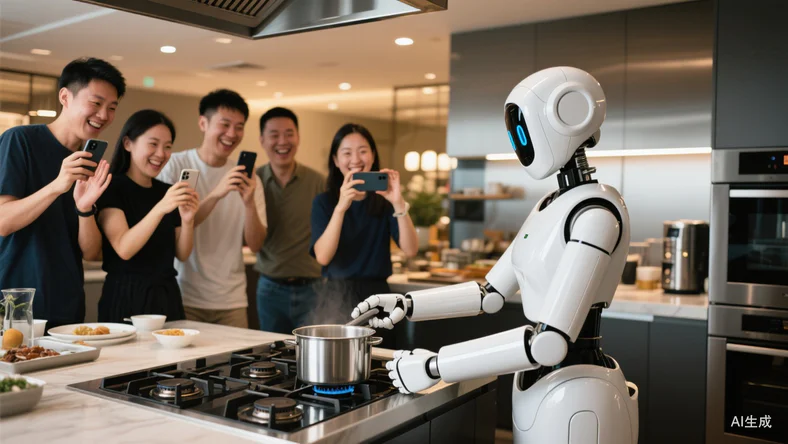

- 3.Surveillance capitalism: Free AI/social apps sell user attention/data, fostering addiction via algorithms

- 4.AI can't replicate human intimacy due to lack of shared real-world experiences
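The "reducing uncertainty" idea in point 2 can be made concrete with Shannon entropy, which measures uncertainty in bits. The sketch below is illustrative only: the distributions and the `add_noise` helper are assumptions chosen for the example, not something taken from the source.

```python
import math

def shannon_entropy(probs):
    """Entropy in bits of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def add_noise(probs, eps):
    """Hypothetical noise model: blend the distribution with a uniform one.

    eps = 0 leaves the distribution unchanged; eps = 1 makes it uniform.
    """
    n = len(probs)
    return [(1 - eps) * p + eps / n for p in probs]

# Maximum uncertainty over four outcomes: nothing is known yet.
uniform = [0.25, 0.25, 0.25, 0.25]

# A confident prediction: most probability mass on one outcome.
peaked = [0.97, 0.01, 0.01, 0.01]

print(shannon_entropy(uniform))                  # 2.0 bits
print(shannon_entropy(peaked))                   # well under 1 bit
print(shannon_entropy(add_noise(peaked, 0.5)))   # noise pushes entropy back up
```

A confident prediction carries low entropy, and blending in noise moves it back toward the uniform case, which is one way to read the warning about trusting predictions under noise.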

Impact Analysis

Highlights AI's statistical strengths alongside its philosophical limits, urging caution when deploying agents for human-like tasks. Reinforces the need for ethical data practices in business models.

Technical Details

The AI is cast as both sender and receiver in Shannon's model: it embeds raw data into vectors, propagates them through noisy neural channels, and decodes the result, with training aimed at minimizing entropy (uncertainty) in its predictions.
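The sender, channel, and receiver roles described above can be sketched as a toy pipeline. Everything here is an assumption made for illustration: the three-symbol `EMBEDDINGS` table, the Gaussian noise model, and the nearest-neighbor `decode` step, which stands in for a trained decoder rather than reproducing one.

```python
import random

# Hypothetical embedding table: each symbol maps to a small vector.
EMBEDDINGS = {
    "cat": [1.0, 0.0, 0.0],
    "dog": [0.0, 1.0, 0.0],
    "car": [0.0, 0.0, 1.0],
}

def encode(symbol):
    """Sender: turn a raw symbol into its embedding vector."""
    return list(EMBEDDINGS[symbol])

def noisy_channel(vector, noise_std, rng):
    """Channel: add independent Gaussian noise to every component."""
    return [x + rng.gauss(0.0, noise_std) for x in vector]

def decode(vector):
    """Receiver: pick the symbol whose embedding is closest to the vector."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(EMBEDDINGS, key=lambda s: sq_dist(EMBEDDINGS[s], vector))

def transmit(message, noise_std, seed=0):
    """Send each symbol through encode -> noisy channel -> decode."""
    rng = random.Random(seed)
    return [decode(noisy_channel(encode(s), noise_std, rng)) for s in message]

def error_rate(sent, received):
    """Fraction of symbols that were decoded incorrectly."""
    return sum(a != b for a, b in zip(sent, received)) / len(sent)

message = ["cat", "dog", "car", "dog"]
for noise_std in (0.0, 0.05, 2.0):
    received = transmit(message, noise_std)
    print(noise_std, received, error_rate(message, received))
```

With little or no noise the message survives intact; as `noise_std` grows, decoding errors become increasingly likely, which mirrors the point that predictions degrade when the channel is noisy.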