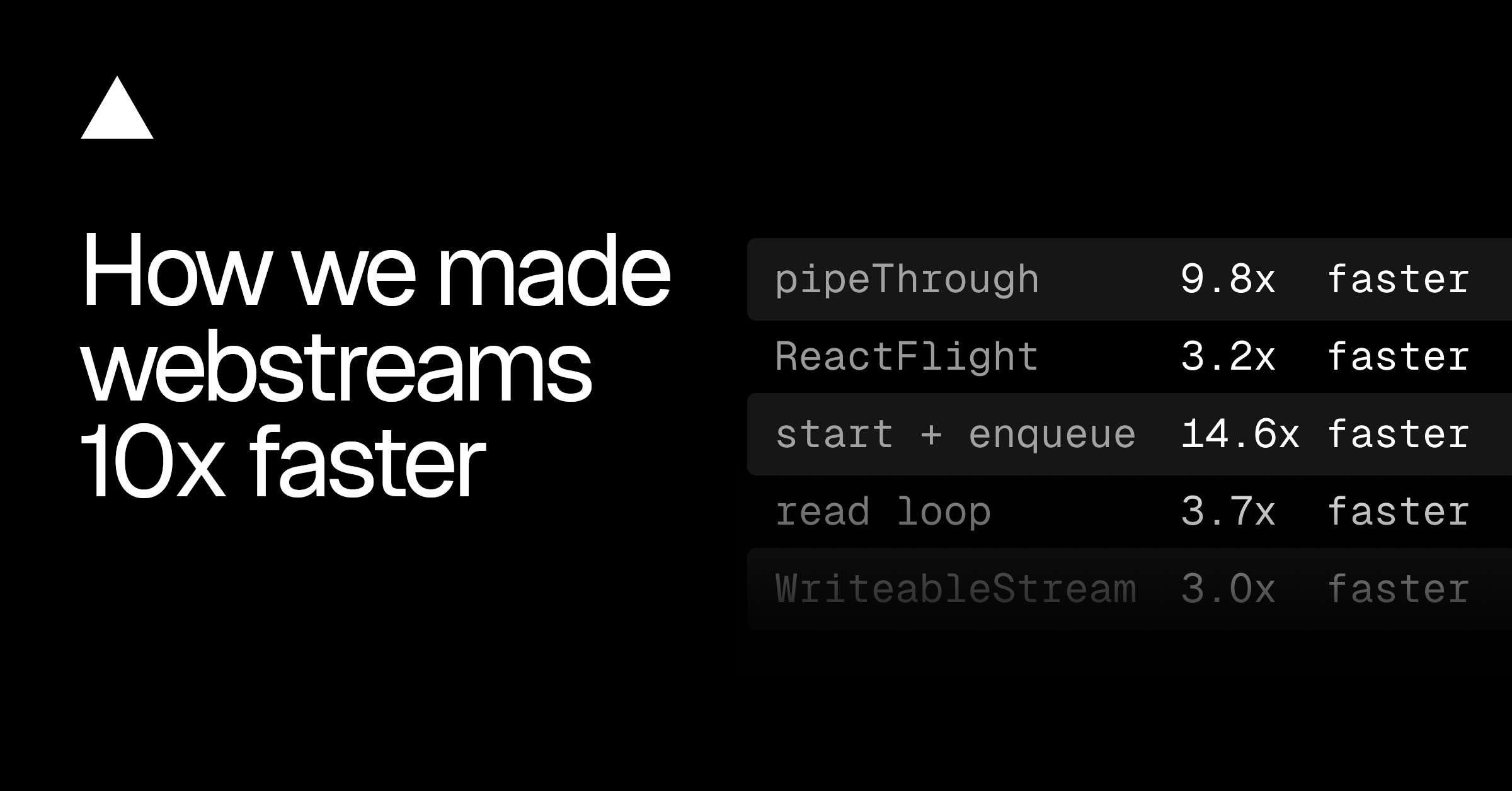

Vercel profiled Next.js server rendering and identified WebStreams as a major bottleneck, driven by Promise chains and object allocations. In response, they built the fast-webstreams library, which reimplements the WHATWG Streams APIs on top of an optimized Node.js streams backend for a 12x speedup. The work is being upstreamed to Node.js via a PR.

Key Points

1. WebStreams dominate Next.js SSR flamegraphs because of Promise and allocation overhead.

2. Native Node.js WebStreams are about 12x slower than legacy Node.js streams: 630 MB/s vs 7,900 MB/s (7,900 / 630 ≈ 12.5).

3. fast-webstreams matches the WHATWG Streams API but routes operations to fast paths backed by Node.js streams.

4. The library is an AI-assisted, test-driven reimplementation focused on server-side performance.

5. The work is being upstreamed to Node.js via a PR from Matteo Collina.
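The fast-path idea in point 3 can be sketched roughly as follows. This is a minimal illustration assuming Node.js 18 or later, not the fast-webstreams API: `NodeBackedReadable`, `NodeBackedWritable`, and `pipeToFast` are hypothetical names. When both ends of a pipe are backed by Node.js streams, the sketch hands the transfer to Node's `pipeline()` from `node:stream/promises`; otherwise it falls back to the spec-shaped WHATWG `pipeTo()` through Node's built-in `Readable.toWeb()` adapter.

```typescript
import { Readable, Writable } from "node:stream";
import { pipeline } from "node:stream/promises";
import { ReadableStream, WritableStream } from "node:stream/web";

// Hypothetical wrapper: a readable whose chunks live in a Node.js Readable.
class NodeBackedReadable<T> {
  readonly node: Readable;
  constructor(node: Readable) {
    this.node = node;
  }

  // Spec-shaped view of the same data, via Node's built-in adapter.
  toWeb(): ReadableStream<T> {
    return Readable.toWeb(this.node) as ReadableStream<T>;
  }
}

// Hypothetical wrapper: a writable whose sink is a Node.js Writable.
class NodeBackedWritable {
  readonly node: Writable;
  constructor(node: Writable) {
    this.node = node;
  }
}

// Hypothetical dispatcher: pick the cheapest route for a pipe.
function pipeToFast<T>(
  src: NodeBackedReadable<T>,
  dest: NodeBackedWritable | WritableStream<T>,
): Promise<void> {
  if (dest instanceof NodeBackedWritable) {
    // Fast path: both ends are Node.js streams, so Node moves the chunks
    // itself instead of building a Promise chain per chunk.
    return pipeline(src.node, dest.node);
  }
  // Fallback: go through the WHATWG pipeTo() machinery.
  return src.toWeb().pipeTo(dest);
}
```

The design choice is that callers keep one API while the implementation chooses the cheaper route at call time; a real library would also have to preserve WHATWG semantics such as stream locking, backpressure, and error propagation on both paths.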

Impact Analysis

The change boosts streaming performance in Next.js and React SSR, which is critical for real-time AI apps such as chat interfaces. It reduces the framework overhead highlighted in benchmarks and enables faster server responses at scale.

Technical Details

Each `reader.read()` call incurs four allocations and a microtask, even when data is already buffered. `pipeTo()` creates a Promise chain and a `{value, done}` object for every chunk. fast-webstreams routes these operations to fast paths, removing that overhead for server-side piping.
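To make the per-chunk cost concrete, here is a minimal sketch using only Node.js built-ins (`node:stream` and `node:stream/web`, assuming Node.js 18 or later). `drainWeb` and `drainNodeSync` are hypothetical helper names, and the sketch counts calls rather than measuring the allocations described above. The WHATWG reader must `await` a fresh `{value, done}` result for every chunk even though all chunks are already queued, while a paused Node.js `Readable` returns buffered chunks synchronously from `read()`.

```typescript
import { Readable } from "node:stream";
import { ReadableStream } from "node:stream/web";

// Spec path: every read() returns a new Promise that resolves to a new
// { value, done } object, so each chunk costs at least one await
// (a microtask turn) even when the chunk is already in the queue.
async function drainWeb(
  stream: ReadableStream<string>,
): Promise<{ chunks: string[]; reads: number }> {
  const reader = stream.getReader();
  const chunks: string[] = [];
  let reads = 0;
  for (;;) {
    const result = await reader.read();
    reads++;
    if (result.done) break;
    chunks.push(result.value);
  }
  reader.releaseLock();
  return { chunks, reads };
}

// Node.js path: a paused Readable hands back buffered chunks synchronously
// from read(), returning null once the internal buffer is empty.
function drainNodeSync(readable: Readable): string[] {
  const chunks: string[] = [];
  let chunk: string | null;
  while ((chunk = readable.read()) !== null) {
    chunks.push(chunk);
  }
  return chunks;
}

// Usage: the same three chunks, already buffered on both sides.
const web = new ReadableStream<string>({
  start(controller) {
    for (const c of ["a", "b", "c"]) controller.enqueue(c);
    controller.close();
  },
});
drainWeb(web).then(({ chunks, reads }) => {
  console.log(chunks, reads); // [ 'a', 'b', 'c' ] 4
});

const node = new Readable({ objectMode: true, read() {} });
for (const c of ["a", "b", "c"]) node.push(c);
node.push(null);
console.log(drainNodeSync(node)); // [ 'a', 'b', 'c' ]
```

Draining the web stream with three queued chunks takes four awaited `read()` calls (the last one reports `done`), whereas the Node.js loop empties its buffer in a single synchronous pass.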